Visualizing recursion

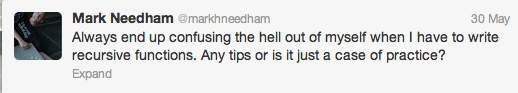

Mark Needham asked on twitter:

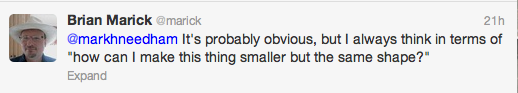

I replied:

He replied:

Since I’m in an explaining sort of mood this week, let me see if I can help.


Prompted by Avdi Grimm’s post on underscores in program identifiers, I’ll publicly air my guess about why theBlightOfCamelCaseInfestsOurPrograms when_identifiers_with_underscores_are_obviously_more_readable. (Although Avdi is right that dashes are superior to both.)

As far as I can tell, the popularity of camel case came from Java, which got it from Smalltalk. But where did Smalltalk get it, and why did such smart people go so badly astray?

Flash back to 1979-1981. I was a computer operator for a PDP-10 mainframe at the University of Illinois Coordinated Science Lab. (I still have two pieces of that computer in my basement.) We had a bunch of VT-100 act-alike terminals. But off in a corner, where no one ever used it, we had a single terminal we called “the European terminal”. One interesting thing about the European terminal was that it had no underscore key. Instead, it had a back-arrow key. I have a faint recollection that if you viewed text with underscores on that terminal, it would show up with back arrows instead. (Or was it that the keyboard did have an underscore on it, but when you pressed it, it displayed a back arrow?)

It’s unlikely you’d write←identifiers←with←back←arrows←in←them and, in any case, some programming languages (such as Smalltalk) use back arrow to mean assignment (instead of, as was common before C took off, `:=`).

All of which makes me think—especially since Smalltalk came from Simula, and Simula came from Europe—was there no underscore available to the people who built Smalltalk back in its early days? Was that why they fell back on StudlyCaps?

(I even have a very dim memory of some language—I don’t think it was Smalltalk—where underscore, as well as a back arrow, could be used for assignment.)

Does anyone know?

I’ve been thinking about what talk I might give at Software Craftsmanship North America this year. I have a reputation for “Big Think” talks that pull together ideas from not-software fields and try to apply them to software. I’ve been trying to cut back on that, as part of my shift away from consulting toward contract programming. Also, I’ve heard rumblings that SCNA is too aspirational and not actionable enough.

However, old habits die hard, and I’m tempted to give a talk like the following. What do you think? Should I instead talk about functional programming in Ruby? Since this antique Wordpress installation makes commenting annoying, feel free to send me mail or reply on Twitter (@marick).

Persuasion, Scientists, and Software People

Science, as practiced, is a craft. Even more, it’s a high-stakes craft: scientists have to make big bets by joining in on what Imre Lakatos called “research programmes”. (A research programme is a Big Theory like Newtonian physics, quantum mechanics, Darwinian evolution, Freudian psychology, the viral theory of cancer, and so on.) It’s interesting to learn how scientists are persuaded to make those big bets.

It may also be useful. There are similarities between scientists and software people that go beyond how software people tend to like science and to be scientifically and mathematically inclined. Scientists build theories that let them build more theories. They build theories that let them build experiments that help them build more experiments. Software people build programs that help them build programs—and help them build theories about programming. So ways scientists are persuaded might also be ways software people can be persuaded.

If so, knowing how scientists persuade each other might help you persuade people around you to risk joining in on your craftsmanship “programming programme”.

In this talk, I’ll cover what the science students Imre Lakatos, Joan Fujimura, and Ian Hacking say about how science progresses. I’ll talk about viruses, jUnit, comets, Cucumber, Mercury’s orbit, Scrum, and positrons.

In the 1980s, I was interested in metrics, maybe even a fan, and cyclomatic complexity was trendy. I was never entirely comfortable with it: all the justification in terms of “basis paths” seemed hand-wavily disconnected from the reality of code, and the literature seemed to go out of its way to obscure the fact that the cyclomatic complexity of a routine is just the number of branches + 1 (including the implicit branches in boolean expressions like `a && b`). (I don’t remember if there are any restrictions on that identity.)
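To make the “branches + 1” identity concrete, here is a rough Ruby sketch. It is not how any real metrics tool works: it only scans text for branching keywords and short-circuit operators, and the name `rough_cyclomatic_complexity` and the token list are assumptions made up for this illustration.

```ruby
# Rough illustration only: this scans raw text, so it will miscount
# keywords that appear inside strings or comments.
BRANCH_PATTERN = /\b(?:if|elsif|unless|while|until|when)\b|&&|\|\|/

# Number of branch points found in the text, plus one.
def rough_cyclomatic_complexity(source)
  source.scan(BRANCH_PATTERN).length + 1
end

SAMPLE = <<~CODE
  def classify(a, b)
    if a && b
      :both
    elsif a || b
      :one
    else
      :neither
    end
  end
CODE

# Branch points in SAMPLE: if, &&, elsif, ||
puts rough_cyclomatic_complexity(SAMPLE)
```

Note that nothing in this count looks at naming, idiom, or data layout, which is the gap the rest of this post is about.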

Then I read a paper in CACM on the cyclomatic complexity of Unix commands, and it was quite a revelation. You see, I worked for a Unix porting shop. We were regularly presented with broken commands whose code we didn’t know well, commands we had to dive into and fix. Two of the commands whose code was ranked least complex by the paper were notorious among us for being hard to understand. Otherwise fearless programmers wept like children when told they’d have to work on `/bin/sh` and `adb`.

Why were those two commands so hard to understand, even if not complex? The one I remember best was `/bin/sh`. This was an older version, written by Steve Bourne, I believe. At that time (I assume he’s gotten over it), he was enamored with the idea of turning C into Algol using the C preprocessor.

That is, his code included a header file that looked like this:

    #define BEGIN {
    #define END }
    #define AND &&

That meant the parts of C that are easy to understand were defined into another language. But you can’t do that with the parts of C that are hard to understand (“Is this a pointer to a function returning an array or a pointer to an array of functions?”), so all that was left (mostly) alone. The end result was like a combination of German and Sanskrit, which turns out to be hard to read even if you know both languages.

(As I recall, the “Algol” part wasn’t just a matter of lexical substitution: it also meant using Algol idioms instead of familiar C ones. I don’t remember the details, but it might have been things like not using C’s null-terminated strings and malloc/free, but instead inventing new libraries to learn.)

None of this affected the branchiness of the `/bin/sh` code, so it didn’t affect the cyclomatic complexity. It just made the job of understanding the code much more, um, complex.

I don’t remember as much about `adb`. I think it was partly the complexity of the domain (it was an assembly-level debugger), partly that it was heavily data-driven (so that the complexity was in the setup and interconnection of data, not in the code that then processed it).

The point of all of this is that merely using a word from the domain of human psychology allows you to import, under cover of night, tons and tons of assumptions, making them harder to notice. By saying “complexity” instead of “branchiness”, the purveyors of one simplistic measure were able to make it seem profound. They could hide, perhaps even from themselves, the fact that they were, at best, feeling one part of the elephant.

—

The same applies, I suspect, to the common statement that “functional programs are easier to reason about.” I happen to believe that’s a heck of a lot closer to being true than that complexity is all about branchiness, but still: it’s important to realize that the motivation for that broad statement has historically been the much narrower claim that functional languages make it easier to prove that programs satisfy their specifications.

When most of us slap down our coffee cup next to the keyboard and open up some code in the editor, our conscious goal is not to make proofs. It’s to accomplish other ends. Therefore, a straight extrapolation from “easier to write proofs about” to “easier to reason about” is a big leap. It smuggles in an assumption that “to reason” is one sort of thing, and that one sort of thing is formal logical deduction. That’s a really strong and dubious claim about the nature of thought, one that hasn’t led to overwhelming practical success when used in, oh, artificial intelligence or as logical positivism.

As usual, we ought to leave the grand claims about “the way humans are” or “the way that it is best to live/work” to psychologists and preachers. Amongst ourselves, perhaps we should just say things like “I’ve been doing this one kind of fairly specific thing recently, and I’ve been surprised to find that X has been really helpful to me. Maybe it will help you too.”

I was trying to figure out what functional reactive programming (FRP) is. I found the descriptions on the web too abstract(*) and the implementations to be in languages that weren’t easy for me to use. I could have been more patient, but I’ve always found rewriting (documentation and implementation) a good way to understand, so I (1) implemented my own (extremely trivial) library in Ruby and (2) changed the names to ones that made sense to me (since I found the terms commonly used to be more opaque tokens than ones that pointed me toward meaning).

And—why not?—I put the code up on github. I also wrote the documentation/tutorial I wish that I’d found early on.

I should point out that it’s quite possible I never really did understand FRP, making my tutorial a Bad Thing.

(*) I don’t mean to criticize those abstract descriptions. They were written by people in particular interpretive communities for other members. I just happen not to be one of those members.

Based on (mostly twitter) response to my note asking for information on composed refactorings, I’ve concluded there’s a gap in the knowledge-market, and I’ve decided to see if I can fill it.

The topic is medium-scale refactorings, ones composed from the kind of Opdyke/Fowler-style “atomic” refactorings that tools can implement. Such medium-scale refactorings can be done in, say, an hour to a day in response to needs that I think of as vaguely “small-scale architectural” (things like the need for layers that cross-cut many vertical slices, or separation of responsibilities into multiple cooperating classes, or the desire to segregate behavior into a pluggable state machine pattern, etc).

As with small-scale refactorings, the goal is to make the change safe in the sense of having a set of tests that always pass. It’s a lower risk and lower disruption (but perhaps slower) approach than discarding and rewriting.

Two aspects particularly interest me:

How do you get good at imagining the “path” from where your code is now to where you want it to be? (A good path wouldn’t leave you stuck halfway with the only way forward being a big, risky change to a bunch of code. It also is one that holds open the possibility of discovering an even better, unanticipated path halfway through.)

People who prefer rewriting to refactoring sometimes say they fear that refactoring gets them “stuck at a local maximum”. I can imagine that: I see what my code is like now, I can see how it would be better, but I can’t see a refactoring path from here to there. But, because I’m hooked on the feeling of safety refactoring gives me, I don’t improve the code.

So it’s worth exploring how often a really good refactorer (not me - yet!) will be unable to see any path. What characterizes such hard cases?

And there are related questions: once you can really do changes well both ways (refactoring and rewriting), what are the tradeoffs? How do you decide which to do?

The first way I want to address the topic is via a refactoring code retreat, patterned roughly after Corey Haines’ code retreats. There have to be differences, though. The point of a code retreat is that you write the code from scratch and throw it away when you’re done. The point of a refactoring code retreat has to be that you start from a code base and transform it. That means someone — that is, me — has to provide the starting code. I’m beginning to do that here. There’s currently one Ruby exercise, complete with instructions.

How can you help?

Try out the split-into-two example. (You can get a tarball or zipball here.) Join the mailing list and post your reaction. (Too simple? Too unclear? Needs a dash of X to make it more realistic? Etc.) [I’ll also take pull requests.]

Give me an example of a medium-scale refactoring you’ve done yourself. A written description at the level of detail of the sections labeled “The app” and “The story” in the split-into-two example would be great. So would a pointer to two commits on Github (or equivalent) and a brief note like “Look at how classes A, B, C changed into A, B, D, and E.”

I’m thinking of having all the examples in Ruby, Java, and JavaScript. Help translating them would be cool. Examples in other languages might also be fun.

I’m concentrating on traditional object-oriented refactoring, but I’m also interested in how refactoring applies in functional languages like Clojure. Got examples?

I’ll need a local host for a first trial workshop. Chicago area preferred.

A quote from Wilfrid Sellars:

The aim of philosophy, abstractly formulated, is to understand how things, in the broadest possible sense of the term, hang together, in the broadest possible sense of the term. […] To achieve success in philosophy would be, to use a contemporary turn of phrase, to ‘know one’s way around.’ [Two commas added for clarity]

In a biographical article, Willem deVries expands on that:

Thus, philosophy [to Sellars] is a reflectively conducted higher-order inquiry that is continuous with but distinguishable from any of the special disciplines, and the understanding it aims at must have practical force, guiding our activities, both theoretical and practical. [Emphasis mine]

That seems to me not horribly askew from what I’m starting to understand as the aim of software architecture. I’d say something like this:

The aim of architecture is to describe how code, in a broad sense of the term, hangs (or will hang) together, in a broad sense of the term. The practical force of architecture is that it guides a programming team’s activities so as to make growing the code easier.

This definition discards the idea of architecture as some ethereal understanding that stands separate from, and above, the code. Architecture isn’t true, it’s useful, specifically for actions like moving around (in a code base) and putting new code somewhere.

I also like the definition because it lets me bring in Richard Rorty’s idea that conceptual change is not effected by arguing from first principles but by telling stories and appealing to imagination. So architecture is not just “ankle bone connected to the leg bone”; it’s about allowing programmers to visualize making a change.

The client I was at for the last three weeks has enough teams working on a single code base that they have an architect role (indeed, a distinct architecture team). That’s made me wonder what I would do were I on that team, given my awfully iterative, refactor-your-architecture-into-being biases.

About those biases

The way I was taught to do architecture was like this:

You think and think and think, and design an infrastructure that will support the needed features. Then you add the features, one by one. The first part is the hard part, which makes the second part easy.

That approach still has enormous appeal for me, but it’s really hard to pull it off. The design is so often wrong, and the features don’t require what you expected, and the infrastructure needs to be changed, but you had no practice changing it, so it’s hard to change it right, and the lesser programmers who are supposed to be doing the features are standing around tapping their feet, or (worse!) they’re not standing around, they’re implementing the features anyway and doing all sorts of hackery to make up for the infrastructure failings, and you were supposed to be this godlike disembodied intelligence that Gets It Right, but now everyone scorns you.

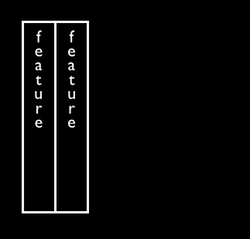

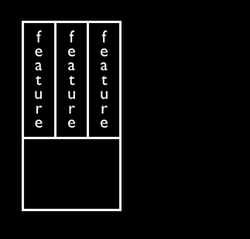

So the safer approach is to start with a single feature and implement it as a “vertical slice” (in a web app, from javascript down to database):

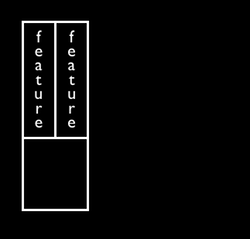

Now you implement the next slice:

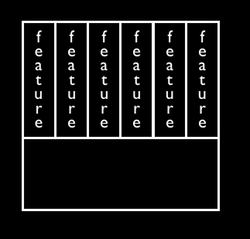

Amazingly, the second slice does some things quite similar to what the first one did. Either during the slice’s development or just after, factor that commonality out into shared code:

Repeat the process with each new slice, constantly finding and factoring common behavior:

The end result is a system with an infrastructure:

Moreover, if you do it well, that infrastructure will look like it was designed by some really smart person who provided exactly (and only) the features needed by the current implementation. And it’s an infrastructure that’s well tuned to allow the sorts of changes and growth that the business will demand in the future (because it was built by responding to those sorts of changes in the past).
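As a small, hedged illustration of “finding and factoring common behavior” across slices, here is a Ruby sketch. Every name in it (`RecordStore`, `register_customer`, `place_order`) is invented for the example; it shows only the shape of the move, where two slices that each validated and stored a record now share one piece of infrastructure that offers exactly what those two slices need.

```ruby
# Hypothetical example: every name here is invented for illustration.

# Shared code that emerged only after the second slice repeated
# what the first slice already did (validate, then store a record).
module RecordStore
  def self.save(table, attributes, required:)
    missing = required.reject { |key| attributes.key?(key) }
    raise ArgumentError, "missing: #{missing.join(', ')}" unless missing.empty?

    (tables[table] ||= []) << attributes
    attributes
  end

  # In-memory stand-in for the database layer.
  def self.tables
    @tables ||= {}
  end
end

# Slice 1: register a customer.
def register_customer(params)
  RecordStore.save(:customers, params, required: [:name, :email])
end

# Slice 2: place an order. Before factoring, it carried its own copy
# of the validate-and-store logic.
def place_order(params)
  RecordStore.save(:orders, params, required: [:customer, :item])
end
```

A third slice would either reuse `RecordStore.save` as-is or push it to grow, which is how the infrastructure ends up shaped by real demands rather than predicted ones.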

How often does this work superbly? Probably about as often as big-architecture-up-front does, but it seems to be less risky and (I’m inclined to believe) gets closer to a really good architecture more often.

What would I do?

My first (and ongoing) task as an architect would be modest. I would pair with people on the implementation of vertical slices, concentrating on being a moving information radiator. In some ways, an architecture is nothing but a bunch of libraries, but any library so restrictive that it can’t be misused is one that can’t be used well. People need to know how to use a library, and the best way of teaching them is to sit with them and show them. An effective project has a common style. It’s everyone’s job to develop and communicate that style, but it surely seems an architect ought to pay special attention to it.

I would also be an agent of intolerance. Programmers are way too tolerant of programming experiences that grate, that throw up friction to seemingly every action. They tweet that they spent the whole day shaving a yak as if that were a mildly humorous thing instead of an outrage. As I programmed with my pair, I would insist we remove the friction for the next person who’ll have to do something similar. If it means we miss our story estimate (we’re working on a real vertical slice, remember), so be it: I will model a calm resolve.

If I do this well, all the teams will know they certainly have permission to solve the many small problems that would otherwise slow them down. They won’t wait for someone else (the architect!) to fix them.

Having enlisted all the teams in putting out small fires, would I then leap toward grand architectural visions? Not if I could avoid it. I’d rather work on solving medium-scale problems. Those are problems that even I wouldn’t be willing to solve under the auspices of a single story.

Here might be a scenario: as before, I’m pairing with someone. We notice the need for some abstraction or other solution, one that’s too big to get right during this story or iteration. We can still do three things, though: (1) imagine what a good solution would look like, (2) imagine what sort of smaller steps might get us there, and (3) take the first step. We end up with a delivered story, some rough plans for the future, and code whose structure is more than the minimum required. For example, we might have pushed code out into a class in a new package/namespace. And we might have changed both our new code and some existing code similar to it to use that new package.

Now, I as architect will actively look for new stories that give excuses to take further steps toward the good solution. I’ll pair with people on those stories. We’ll use the stories as an excuse to flesh out the previous solution (in a way that either doesn’t break previous stories or adapts them to use the fleshed-out solution).

I fully expect that the original “good solution” will look somewhat naive partway through this process. That’s fine. If I’m an architect, I’m supposed to be good at adjusting my path as I traverse it.

Eventually, we have a decent solution, some vertical slices that use it, some vertical slices that don’t use it, and some vertical slices that use it in a sort of halfway, intermediate, not-quite-right way. It’s my job to make sure code doesn’t get frozen in that state. Partly that’s a matter of helping on stories that give us an excuse to fix up some vertical slice. Partly that’s making sure my pairing results in ever more people who know how to use the solution and are sure to change code to use it when they get the excuse. (That’s the information radiator and agent of intolerance roles as applied to this one solution.)

What about the grand architectural vision? I don’t know. I’ve factored out medium-scale solutions, but I don’t have the personal experience to know how you get from one major architecture to a completely different one. I want to get vicarious experience in that this year.

So, I’d be nervous about big picture architecture, were I an architect. However, I’d take some comfort in knowing that we’d almost certainly be using frameworks that suggest their own architecture (Rails being a good example of that), and that we wouldn’t go too far wrong if we just did what our toolset wanted us to. (So, even though I’m personally fashionably skeptical of the value of object-relational mapping layers, I’d be conservative and use ActiveRecord, though I’d probably lean toward a development style like Avdi Grimm’s Objects on Rails.)

Still, there are two things I’d emphasize, if only out of fear of my own inexperience:

I do surely think that product directors should emphasize doing high-business-value work early and low- or speculative-business-value work later. However, as an architect, I’d be on the lookout for minimal marketable features that would stress the architecture, and I’d sweet-talk the product director into scheduling them earlier than she otherwise might. (Said sweet-talking would be easier because I’d always be concentrating on reinforcing the gift economy structure that makes Agile work.)

There exists an architectural change cycle that wastes endless amounts of money: humble programmers work within a given architecture, Architects That Be decree there will be a new one, programmers continue to work in old architecture because the new one isn’t available, the new architecture appears, programmers begin to use it, it turns out to have serious flaws, now the system has some code in architecture 1 and in architecture 2, all of which will be superseded by the “This time, definitely” architecture 3, etc.

I’d hope to force myself to roll out any new architecture gradually (in increments, as it was developed). That imposes extra cost on architecture development and somewhat reduces the possibility of change, but my bet would be the business cost of that constraint would be less than that of a Big Bang rollout.

Tools like Fit/FitNesse, Cucumber, and my old Omnigraffle tests allow you to write what I call “rhetoric-heavy” business-facing tests. All business-facing tests should be written using nouns and verbs from the language of the business; rhetoric-heavy tests are specialized to situations where people from the business should be able to read (and, much more rarely, write) tests easily. Instead of a sentence like the following, friendly to a programming language interpreter:

given.default_payment_options_specified_for(:my_account)

… a test can use a line like this:

I have previously specified default payment options.

(The examples are from a description of Kookaburra, which looks to be a nice Gem for organizing the implementation of Cucumber tests.)

You could argue that rhetoric-heavy tests are useful even if no one from the business ever reads them. That argument might go something like this: “Perhaps it’s true that the programmers write the tests based on whiteboard conversations with product owners, and it’s certainly true that transcribing whiteboard examples into executable tests is easier if you go directly to code, but some of the value of testing is that it takes one out of a code-centric mindset to a what-would-the-user-expect mindset. In the latter mindset, you’re more likely to have a Eureka! moment and realize some important example had been overlooked. So even programmers should write Cucumber tests.”

I’m skeptical. I think even Cucumber scenarios or Fit tables are too constraining and formal to encourage Aha! moments the way that a whiteboard and conversation do. Therefore, I’m going to assume for this post that you wouldn’t do rhetoric-heavy tests unless someone from the business actually wants to read them.

Now: when would they most want to read them? In the beginning of the project, I think. That’s when the business representative is most likely to be skeptical of the team’s ability or desire to deliver business value. That’s when the programmers are most likely to misunderstand the business representative (because they haven’t learned the required domain knowledge). And it’s when the business representative is worst at explaining domain knowledge in ways that head off programmer misunderstandings. So that’s when it’d be most useful for the business representative to pore over the tests and say, “Wait! This test is wrong!”

Fine. But what about six months later? In my limited experience, the need to read tests will have declined greatly. (All of the reasons in the previous paragraph will have much less force.) If the business representative is still reading tests, it’s much more likely to be pro forma or a matter of habit: once-necessary scaffolding that hasn’t yet been taken down.

Because process is so hard to remove, I suspect that everyone involved will only tardily (or never) take the step of saying “We don’t need Cucumber tests. We don’t need to write the fixturing code that adapts the rhetorically friendly test steps to actual test APIs called by actual test code.” People will keep writing this:

… when they could be writing something like this:

That latter (or something even more unit-test-like) makes tests into actual code that fits more nicely into the programmer’s usual flow.
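As a rough illustration of the contrast, here is a Ruby sketch that reuses the two lines quoted earlier from the Kookaburra description. `TestWorld`, `STEPS`, and `run_step` are hypothetical stand-ins invented for this sketch; they are not Cucumber’s or Kookaburra’s real API. They only show the extra adapter layer that the rhetoric-heavy style requires.

```ruby
# Hypothetical stand-ins: TestWorld, STEPS, and run_step are invented
# here and are not Cucumber's or Kookaburra's real API.
class TestWorld
  attr_reader :default_payment_accounts

  def initialize
    @default_payment_accounts = []
  end

  # The "actual test API" that both styles end up calling.
  def default_payment_options_specified_for(account)
    @default_payment_accounts << account
  end
end

# Rhetoric-heavy style: a sentence, plus fixturing code that adapts it.
STEPS = {
  "I have previously specified default payment options." =>
    ->(given) { given.default_payment_options_specified_for(:my_account) }
}.freeze

def run_step(sentence, given)
  STEPS.fetch(sentence).call(given)
end

rhetorical = TestWorld.new
run_step("I have previously specified default payment options.", rhetorical)

# Direct style: the test is ordinary code, with no adapter layer.
direct = TestWorld.new
direct.default_payment_options_specified_for(:my_account)
```

Both worlds end up in the same state; the difference is the sentence-to-code table that someone has to keep maintaining.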

How often is my suspicion true? How often do people keep doing rhetoric-heavy tests long after the rhetoric has ceased being useful?

Even after all these years, I take too-big steps while coding. In less finicky languages (or finicky languages with good tool support), I can get away with it, but I can’t do that in C++ (a language I’ve been working in for the past two weeks). So I’ve had to think more explicitly about taking baby steps. And that’s made me realize that there must be some refactoring literature out there that I’ve overlooked or forgotten. That literature would talk about composing small-scale refactorings into medium-scale changes. The only example I can think of is Joshua Kerievsky’s Refactoring to Patterns. I like that book a lot, but it’s about certain types of medium-scale refactorings (those that result in classic design patterns), and there are other, more mundane sorts.

Let me give an example. This past Friday, I was pairing with Edward Monical-Vuylsteke on what amounts to a desktop GUI app. Call the class in question Controller, as it’s roughly a Controller in the Model-View-Controller pattern. Part of what Controller did was observe what I’ll call a ValueTweaker UI widget. When the user asked for a change to the value (that is, tweaked some bit of UI), the Controller would observe that, cogitate about it a bit, and then perhaps tell an object deeper in the system to make the corresponding change in some hardware somewhere.

That was the state of things at the end of Story A. Story B asked us to add a second ValueTweaker to let the user change a different hardware value (of the same type as the first, but reachable via a wildly different low-level interface). The change from “one” to “two” was a pretty strong hint that the Controller should be split into three objects of two classes:

A ValueController that would handle only the task of mediating between a ValueTweaker on the UI and the hardware value described in story A.

A second ValueController that would do the same job for story B’s new value. The difference between the two ValueControllers wouldn’t be in their code but in which lower-level objects they’d be connected to.

A simplified Controller that would continue to do whatever else the original Controller had done.

The question is: how to split one class into two? The way we did it was to follow these steps (if I remember right):

Create an empty ValueController class with an empty ValueController test suite. Have Controller be its subclass. All the Controller tests still pass.

One by one, move the Controller tests that are about controlling values up into the ValueController test suite. Move the code to make them pass.

When that’s done, we still have a Controller object that does everything it used to do. We also have an end-to-end test that checks that a tweak of the UI propagates down into the system to make a change and also propagates back up to the UI to show that the change happened.

Change the end-to-end test so that it no longer refers to Controller but only to ValueController.

Change the object that wires up this whole subsystem so that it (1) creates both a Controller and ValueController object, (2) connects the ValueController to the ValueTweaker and to the appropriate object down toward the hardware, and (3) continues to connect the Controller to the objects that don’t have anything to do with changing the tweakable value. Confirm (manually) that both tweaking and the remaining Controller end-to-end behaviors work.

Since it no longer uses its superclass’s behavior, change Controller so that it’s no longer a subclass of ValueController.

Change the wire-up-the-whole-subsystem object so that it also makes story B’s vertical slice (new ValueTweaker, new ValueController, new connection to the hardware). Confirm manually.
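The steps above can be sketched in Ruby. This is not the code from that pairing session, which was C++. The class bodies, `FakeHardwareValue`, `TransitionalController`, and `wire_up` are all invented here to show the intermediate and final shapes, under the assumption that “handling a tweak” boils down to validating a value and passing it down.

```ruby
# Hypothetical sketch: the real work was in C++, and every class body
# here is invented to show the shape of the steps, not the real code.

# Stand-in for an object "deeper in the system" that changes hardware.
class FakeHardwareValue
  attr_reader :value

  def set(value)
    @value = value
  end
end

# The value-handling behavior, moved up test by test from Controller.
class ValueController
  def initialize(hardware_value)
    @hardware_value = hardware_value
  end

  # Called when the observed ValueTweaker reports a requested change.
  def tweak_requested(new_value)
    @hardware_value.set(new_value) if acceptable?(new_value)
  end

  private

  def acceptable?(new_value)
    new_value.is_a?(Numeric)
  end
end

# Intermediate state: the old controller inherits from ValueController,
# so everything it used to do still works while tests are being moved.
class TransitionalController < ValueController
  def other_work
    :done
  end
end

# Final state: no superclass, only the remaining behavior.
class Controller
  def other_work
    :done
  end
end

# The wiring object builds one ValueController per vertical slice;
# the two differ only in which lower-level object they are given.
def wire_up(hardware_a, hardware_b)
  {
    controller: Controller.new,
    value_a: ValueController.new(hardware_a),
    value_b: ValueController.new(hardware_b)
  }
end
```

The temporary inheritance is what keeps each step small: at no point does the old controller lose behavior before the new wiring is ready to take it over.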

I consider that a medium-scale refactoring. It’s not something that even a smart IDE can do for you in one safe step, but it’s still something that (even in C++!) can easily be finished in less than an afternoon.

So: where are such medium-scale refactorings documented?