Exploration Through Example

Example-driven development, Agile testing, context-driven testing, Agile programming, Ruby, and other things of interest to Brian Marick


Fri, 19 Dec 2003

Agile Project Management with Scrum

I'm reading a prepublication copy of Ken Schwaber's Agile Project Management with Scrum. It rocks. Stories of real projects, just like I like. Watch for it.

## Posted at 17:57 in category /agile


Open source automated test tools written in Java

Carlos E. Perez has a nice list of tools.

## Posted at 10:08 in category /misc


Joe Bergin's Do the Right Thing pattern. (Warning: humor.)

## Posted at 10:02 in category /misc


[Updated to get Alan Green's name right.]

Charles Miller talks about decorating programmer tests to check for things like resource leaks. Alan Green chimes in. I met Charles Simonyi briefly on Tuesday and, weirdly enough, he had the same idea (with an aspect-ish flavor).

A clever idea, plus it's a trigger for me to go meta. On the airplane Saturday, I highlighted this sentence from Latour and Woolgar's Laboratory Life: The Construction of Scientific Facts:

... the solidity of this object... was constituted by the steady accumulation of techniques (p. 127 of the 2nd edition, 1986).

The object in question was Thyrotropin Releasing Factor (TRF), which is something you have inside you. Latour and Woolgar give a story of how TRF went from being a name given to something hypothetically present in one kind of unpurified glop to, specifically, Pyro-Glu-His-Pro-NH2.

Now, you might say that TRF was always a solid object - that Pyro-Glu-His-Pro-NH2 always existed, whether or not any person knew of it. Fine. But with respect to people, TRF didn't exist until the end of an enormous and time-consuming effort that yielded both a formula and, eventually, a Nobel prize. After that effort, other researchers could depend on that structure with unreflective certainty, and manufacturers could manufacture TRF in bulk rather than extracting minute quantities from slaughtered sheep.

Latour and Woolgar say that the sheer mass of diverse techniques applied - mass spectrometry, skilled technicians performing bioassays, computer algorithms, techniques for writing persuasive papers, and so forth - made TRF into a solid object people can use for their purposes.

I don't particularly care about TRF. I read this kind of stuff to give me ideas about what I do. And part of what I do is help construct facts.

You see, people make things in the world. Some of those things are very concrete: bridges. Some of them are very abstract: democracy. Both kinds of things have power in the world, and both are solid: the idea of democracy is as resistant to attack as the Golden Gate Bridge is to weather and tides.

I can interpret Latour and Woolgar as implying that democracy is not just an idea; rather, it's built from the techniques used to implement it. Robert's Rules of Order make parliamentary democracy. They're not merely one set of adornments around its unchanging essence.

Similarly, object-oriented programming is not just programming with languages that provide inheritance, polymorphism, and encapsulation. It's built from how people use those languages - from design patterns, from CRC cards, from old talk about finding objects by underlining the nouns in a problem description, from even older practices of using OO languages for simulation, from the push toward ever-more rapid iteration and feedback, from allowing exceptions to be stashable objects rather than transient stack transfers, and so forth. All these things - some of which don't seem in any way a part of any abstract essence of OO - nevertheless make up the solid notion we share.

What postings like Charles's and Alan's signify to me is that programmer testing is rapidly solidifying into a new fact in the world. For the first time, it's approaching the reality of the Golden Gate Bridge. Weird, huh? And hope-making.
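For readers who haven't seen the test-decorating idea mentioned at the top of this post, here is a minimal Ruby sketch of what it might look like. It is not Charles's or Alan's code: `TrackedResource`, `LeakDetected`, and `with_leak_check` are hypothetical names invented for the illustration, and a real version would hook into whatever test framework is in use.

```ruby
# Hypothetical resource that counts how many instances are currently open.
class TrackedResource
  @open_count = 0
  class << self
    attr_accessor :open_count
  end

  def initialize
    self.class.open_count += 1
  end

  def close
    self.class.open_count -= 1
  end
end

class LeakDetected < StandardError; end

# The "decorator": run any test body, then fail if it left resources open.
def with_leak_check(test_name)
  before = TrackedResource.open_count
  yield
  leaked = TrackedResource.open_count - before
  raise LeakDetected, "#{test_name} leaked #{leaked} resource(s)" if leaked > 0
end

# A tidy test passes silently.
with_leak_check('tidy test') do
  resource = TrackedResource.new
  resource.close
end

# A leaky test is caught even though its own assertions never looked for leaks.
begin
  with_leak_check('leaky test') do
    TrackedResource.new # never closed
  end
rescue LeakDetected => e
  puts e.message
end
```

The point of the wrapper is that the leak check is written once and applied to every test, rather than repeated inside each one.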

## Posted at 06:45 in category /testing


Wed, 17 Dec 2003

Suppose I'm using programmer-style test-first design to create a class. I want four things.

That's all pretty easy because I'm one person creating both tests and code, plus I know conventional testing. Now consider acceptance testing, the creation of what I quixotically call customer-facing checked examples. A programmer will want all the same things (plus more conversation with business experts). This is harder, it seems to me, because more people are involved. Suppose testers are creating these acceptance tests (not by themselves, but taking on the primary role, at least until the roles start blending). They have a tough job. They want to get those first tests out fast. They want to keep pumping them out as needed. They have to be both considerate of the time and attention of the business experts and also careful to keep them in charge. They must create tests of the "right size" to keep the programmers making steady, fast progress, but they also have to end up with a stream of tests that somehow makes sense as a bug-preventing suite at the end of the story. There must be a real craft to this. It's not like conventional test writing. It's more like sequencing exploratory testing, I think, but still different. Fortunately, it looks like there's a reasonable chance I'll be helping teams get better at this over the next year, and I still plan to make a point of visiting other teams that have their act together. Mail me if you're on one, or if you have good stories to tell.

## Posted at 16:18 in category /agile


Sun, 14 Dec 2003

Walk through the walls of knowledge guilds

A (the) Viridian research principle:

The boundaries that separate art, science, medicine, literature, computation, engineering, and design and craft generally are not divinely ordained. The most galling of these boundaries are socially generated entities meant to protect the power-interests of knowledge guilds. This is not to say that all research techniques are identical, or that their results are all equally valid under all circumstances: quantum physics isn't opera. But there exists a sensibility that can serenely ignore intellectual turf war, and comprehend both physics and opera. You won't be able to swing a grant or sing an aria by knocking politely at the stage door. They won't seat you at the head of the table and slaughter the fatted calf. But you can take photographs, plant listening devices and leave. If you choose, you can step outside the boundaries history makes for you. You can walk through walls.

## Posted at 16:50 in category /misc


Fri, 05 Dec 2003

Martin Fowler writes (quoted in its entirety):

Acceptance tests are things to point at while having a conversation. The speakers will be testers, programmers, and business experts. The neatest surprise with FIT, to my mind, is the way you can turn a web page of tests into a narrative. I've mislaid my good example of this, but here's a snippet of a page about an example Ward often uses: a bond trading rule that all months should be treated as having 30 days. (Note: I don't know if the following is correct - I was just playing around with FIT, not writing a real app.)

The page is organized as a set of special cases, each with a brief description followed by some checked examples. I really like this style: it's an easy-to-write mini-tutorial for the programmer and reader.

However, when it comes to tests that are sequences (action fixtures), I find that the tabular format grates. The table, instead of being a frame around what I read, is something that intrudes on my reading. It may be that I'm too used to code.

Another difficulty is variables: such useful beasts. Think of constructing a circular data structure. Yes, you can write a parseable language to do that (Common Lisp had one, as I recall), but to my mind it's simpler just to create substructures, give them names, and use the names to lash things together. Or consider stashing results in a variable and then doing a set of checks. Or comparing two results to each other, where the issue isn't exact values but the relationships between values. You could invent variables in the FIT language, but you're starting to get into notational problems that scripting languages have already solved. That way lies madness (after inventing loops and subroutines and...)

And yet, there is the niceness of HTML format. I've been toying with the idea of a free-form action-ish fixture that looked like this:

Running the test might yield this:

Here, I'm following RoleModel's lead in using Ruby as a somewhat customer-readable language. (<rant>And I also fixed the backwards order of xUnit

This format also allows for a mixture of scripting and tabular styles. I have some tests that look like this:

You'll notice that I indent the asserts. That's because the tests (especially the longer ones) do a lot of starting, stopping, and pausing of various interacting jobs. It's too hard to see what's going on if the asserts aren't visually distinguished from the actions. Nevertheless, it's still pretty ugly. I showed it to a customer-type (my wife, who knows no programming languages). She understood it fine after I explained it, but it didn't thrill her. This might be better:

The fixture synthesizes all the cells into one Ruby method. Is this better? (I'm not a particularly gifted visual designer, as anyone who's looked at my main website can tell.)

A final thought. I've read a couple of papers on intentional programming. (Can't find any good links.) It didn't click for me. I think it was the examples. One example had a giant

The thing is, I found the mixture of C and mathematical notation jarring. Is the equation really clearer - to a programmer immersed in the app - than

But when we're talking about tests, we have two people who have very different cognitive styles, goals, and backgrounds reading one text. I can envision a common underlying test representation that can be switched between two modes. One is the "discuss the test with the customer" mode. The other is the "see the test as executable code" mode. Perhaps Intentional Software will take up Martin's challenge. (If so, my consulting fees are quite modest...)

1 The equation shown isn't the one used in the paper, not unless there's been an amazing coincidence.

## Posted at 16:41 in category /testing


Tue, 02 Dec 2003

And now, a break for silliness.

## Posted at 17:58 in category /junk


Mon, 01 Dec 2003

Debugging, thinking, logging: how much of each?

Charles Miller quotes two authors on opposite sides of the debate over whether programmer tests eliminate the need for a debugger. I'm on the "I hardly ever use a debugger" side, but perhaps that's only because programmers perk up when I say I don't even know how to invoke the debugger in my favorite language (Ruby). Since it's my job to make programmers perk up, it's not in my interest to be a debugging fiend.

The debate made me think of a third approach, or perhaps a complement to the other two, that doesn't get the press it deserves. Let's step into the Wayback Machine...

It's 1984, the height of the AI boom. Expert systems are all the rage. The company I worked for hatched the idea that what builders of flight simulators (the kind that go inside big domes filled with hydraulics) really wanted was... Lisp. (I love Lisp, but this was not the savviest marketing decision.) They cast around for someone who knew Lisp. I'd played around with it for a week. That qualified me to be technical lead. Dan was a quite good C programmer and happy on strange, out-of-the-way projects. Sylvia was a half-time graduate student who knew Fortran and Prolog.

In the end, we produced the best Lisp in the world, if by "best" you mean "quality of the final product divided by how much the team started out knowing about Lisp". Actually, it wasn't bad. I'm pleased with what we accomplished.

It wasn't really that momentous an accomplishment, though. There was a free Lisp-in-Lisp implementation from Carnegie-Mellon. It ran on a machine called the Perq, whose main feature was user-programmable microcode. CMU had microcoded up a Lisp-machine-like bytecode instruction set, and their compiler produced bytecodes for it to execute. So we got a good start by coding up an interpreter (virtual machine) for the same instruction set. We just used C instead of microcode. I did the infrastructure (garbage collector, etc.) and Sylvia did most of the bytecodes.

I now get to the point... Early on, I decided on a slogan for my code: "no bug should be hard to find the second time". Whenever a bug was hard to find, I wrote whatever debug support code would have made that kind of bug easy to find. Over time, the system turned into something that was eminently debuggable. Snap your fingers, and it told you what was wrong.

The things I did were very situated: they depended on the bug. But one thing I did was add a lot of logging. By letting the bugs drive where I put in logging statements, I avoided cluttering up the code too much. I remain a big fan of logging, and I'm distressed that the logging you see is so often so useless to anyone but the original author. Resources:

The use of logging makes debuggers less necessary. Instead of single-stepping to figure out how on earth the program got to a point, you look at the log. If the logging is well-placed, and you have decent logging levels, you don't get mired in detail.

Having said that, it doesn't seem that logging is that useful to me in programmer tests. I don't need to know how the tests got somewhere. It's more useful in acceptance tests, where more is happening before the point of failure. Still, I rarely find myself looking at the log. It's most useful when trying to diagnose a bug not found by an automated test. Such bugs could be found by users or by exploratory testing. (Because exploratory testing is rather free-form, the log can help remind you of what you did when it's time to replicate a bug.)

One logging tip for large systems: I had a great time once doing exploratory testing of a big Java system that had decent logging. I'd dink around with the GUI, but have the scrolling log open in another window. Every so often, something interesting would flash by in the log: "Look! The main event loop just swallowed a NullPointerException!" That would reveal to me that I'd tickled something that had no grossly obvious effect on the external interface. It was then my job to figure out how to make it have a grossly obvious effect.
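To make the point about logging levels and swallowed exceptions concrete, here is a minimal sketch using Ruby's standard `Logger`. The `EventLoop` class and its handler names are hypothetical, made up for the illustration; nothing here is taken from the Java system described above.

```ruby
require 'logger'
require 'stringio'

# Hypothetical event loop: the class and handler names are illustrative only.
class EventLoop
  def initialize(logger)
    @logger = logger
  end

  # Runs each handler in turn. A failing handler is logged and swallowed so
  # the loop keeps going, with no visible effect on the external interface.
  def dispatch(handlers)
    handlers.each do |name, handler|
      @logger.debug("dispatching #{name}")
      begin
        handler.call
      rescue StandardError => e
        @logger.warn("event loop swallowed #{e.class} in #{name}: #{e.message}")
      end
    end
  end
end

log_output = StringIO.new
logger = Logger.new(log_output)
logger.level = Logger::INFO # DEBUG detail is suppressed at this level

event_loop = EventLoop.new(logger)
event_loop.dispatch({
  'save'  => -> { :ok },
  'print' => -> { raise ArgumentError, 'no printer selected' }
})

# Only the WARN line appears; the per-handler DEBUG chatter does not.
puts log_output.string
```

With the level at INFO, the log stays quiet until something interesting happens, which is what makes a scrolling log window worth watching. Dropping the level to `Logger::DEBUG` brings back the step-by-step detail when a particular bug calls for it.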

## Posted at 21:18 in category /misc


Fri, 28 Nov 2003

I'm looking for really exemplary programmer tests (unit tests, whatever) to discuss in a class. The tests should be for a substantial product, something that's been put to real use. (It could be a whole product or some useful package.) I favor Java examples, though I'll also take C#, C++, C, Ruby, or Python. The example should be open source, or I should get special permission to use it. I'd really like to be able to run the tests, not just read them. If you know of such a thing, tell me. Thanks.

## Posted at 16:49 in category /testing


Thu, 27 Nov 2003

Coding standards (and a little on metaphors)

Somewhere around 1983, I shot my mouth off one time too many and found myself appointed QA Manager for a startup. I'm sure I would have been ineffectual no matter what - I had neither the technical credibility nor the personal skills for the job. The moment I realized I was doomed was probably in the middle of a rambunctious company-wide argument about a coding standard. I still have bad dreams about where to put the curly braces in C code.

Bill Caputo has a posting on coding standards. What I'll remember from it is a slogan I just made up: Coding standards are about the alignment of teams, not the consistency of code. Where were you when I needed you, Bill?

I also quite like Bill's earlier posting about consistency. My thoughts on consistency and completeness are moving in an odd direction, it seems. For example, I'm fond of Lakoff and Johnson's thesis that reasoning is metaphorical. So I think that our understanding of Understanding is freighted with the metaphor UNDERSTANDING IS SEEING. That changes the way we look for (ahem) understanding.

Some time ago, I started wondering why I have such a visceral sense of whether a system of thought is complete and consistent. Some of them simply seem whole, and that feeling is important to me. Why? Lakoff and Johnson say, "We are physical beings, bounded and set off from the rest of the world by the surface of our skins... Each of us is a container, with a bounding surface and an in-out orientation." (p. 29) Quite a lot of reasoning is based on metaphors of the form X IS A CONTAINER, and it seems like I'm using the CONCEPTUAL SYSTEM IS A CONTAINER metaphor. And I think others are, too.

But why should a conceptual system be a container? Why should it have an inside and an outside? So I'm actively on the lookout for systems that are partial, fuzzy, inconsistent - but nevertheless useful.

## Posted at 12:23 in category /misc


I know her premise, but I too often forget it in the heat of the moment. Maybe if I write it 500 times, it will become a habit. I'll start with one time:

## Posted at 10:12 in category /misc


## Posted at 09:42 in category /testing


Wed, 26 Nov 2003

I believe in social trends. The trend these days, certainly in the USA, is to cry 'Havoc,' and let slip the dogs of war. You see it in politics, you hear it on the radio, and - I believe - it's increasingly common in the software world. (Not that it was unknown before, mind you - I think net.flame was created in 1982.) Too many people's ideas are being wrongly discounted because of who they are, who they associate with, who they have sympathy for, what other ideas they have, or other trivia.

I don't think I can persuade anyone to sip from the half-full glass if they prefer to smash it because it's half empty. But I have been on the lookout for tools that can help those inclined to take that sip. (Yeesh, that's self-righteous. Sorry.) One I found is based on Richard Rorty's notion of a "final vocabulary". I invite you to read my essay on it.

## Posted at 11:45 in category /misc


Mon, 24 Nov 2003

Here are some thoughts about my topics and exercises for the Master of Fine Arts in Software trial run. They are tentative.

The topics are driven by a position I take. It is that requirements do not represent the problem to be solved or the desires of the customers. Further, designs are not a refinement of the requirements, for practical purposes. Neither is code. And tests don't represent anything either. Rather, all artifacts (including conversation with domain experts) are better viewed as "triggers" that cause someone to do something (with varying degrees of success). Representation and refinement don't enter into it (except in the sense that we tell stories about them).

So both requirements and system-level tests are ways of provoking programmers to do something satisfying. And code is something that, when later programmers have to modify it, triggers them to do it in a more useful or less useful way.

In practical terms, I am thinking of covering these topics:

## Posted at 08:05 in category /misc


Fri, 14 Nov 2003

In praise of Hiroshi Nakamura, and the Ruby community

I'm writing an article about doing exploratory testing of APIs using Ruby. The article shows how to test the Google web services API, so I'm using Hiroshi Nakamura's SOAP4R package. I found and reported a bug in that package. 23 minutes later, he replied with a patch. A reviewer of the article had a question about proxy servers. Less than 20 minutes later, he had a response. Other emails to Hiroshi have led to similarly pleasant results.

Hiroshi is emblematic of the best spirit of the Ruby community. One thing I noticed right off the bat when learning Ruby is how helpful the Ruby community is. I hope we can keep it up. (I'm feeling really regretful that I didn't make it to the RubyConf this year.)

## Posted at 19:36 in category /misc


Thu, 13 Nov 2003

Christian Sepulveda on coaching

Christian Sepulveda writes what I want to call a mission statement for agile coaching, except that "mission statement" is too often a synonym for "vacuous", which this is not.

Were I starting as a coach, I'd hand Christian's essay out to the team and say, "Here. Tell me when I don't live up to this." Christian follows the mission statement with some practical guidelines. He talks about identifying and understanding stakeholders. At PLoP, Jeff Patton walked us through a two-hour example of doing just that, using a variant of Constantine/Lockwood style usage-centered design. Shameless plug: Jeff has written an article on that topic for STQE magazine. It'll be out in the January issue.

## Posted at 11:02 in category /agile


Wed, 12 Nov 2003

New blogger Jonathan Kohl has a different way of explaining my four categories of agile testing. He uses a tree instead of a 2-way matrix.

I think I like his approach better than mine. It provides an appealing sequence for presenting the ideas. The root of the tree sets the stage. The first two branches emphasize the importance of engaging with two worlds:

Then you introduce another distinction: between testing as support for other people during their work and testing as a critique of something those people have completed. You can dive into the details according to the interests of the audience: "which of these do you want to talk about?" I may give this tree a try some time, although I'm still fond of "business-facing product critique". Rolls so elegantly off the tongue, don't you think? Calgary, Canada - where Jonathan lives - is, by the way, a real hotbed of agile testing activity. It also happens to be where XP Agile Universe will be next year. Let's hope a lot of agile testers come.

## Posted at 12:48 in category /agile


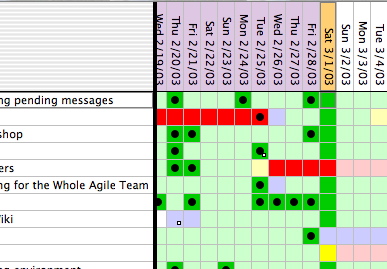

Sat, 08 Nov 2003

I sent my first email in 1975 (maybe 1976), and I think I'm well past the knee in the exponential curve. If I've been tardy about responding to your email, now you know why. Alack, alas: I'm a helpless victim of the laws of nature.

## Posted at 16:24 in category /misc


Thu, 06 Nov 2003

I find the following quote (from Laurent Bossavit, part of a longer post) pretty evocative, though I can't quite say yet what it evokes.

Blogs on testing from Microsoft (via Tim van Tongeren): Sara Ford, Josh Ledgard, and Joe Bork.

## Posted at 07:43 in category /misc


Wed, 05 Nov 2003

Alan Francis and Christian Sepulveda comment on my note about Agile Alliance public relations.

Via Jason Kottke, Esther Derby has a wonderful set of guidelines for learning. I much like Esther's addition: "make the most generous possible interpretation".

Ben Hyde has a discussion of what happens as firms get ahead of their customers. The point of this chart is that over time a firm's product offerings begin to outstrip the ability of the customers to absorb all the functionality the product offers.

## Posted at 08:09 in category /misc


Sun, 02 Nov 2003

A new role within the Agile Alliance

Completely to my surprise, I was elected vice-chair of the Agile Alliance nonprofit at the recent board meeting. I think this marks the definitive tipping point: I'm no longer a testing person interested in agile methods. I'm an agile methods person interested in testing.

I'm an odd duck, in that I am both attracted to the revolutionary, the iconoclastic, yet also want to be - and be seen as - reasonable and sensible. My first main task will be one that combines the two desires.

The agile methods are, I think, well-established among the enthusiast and visionary segments of the technical communities. Left to themselves, the agile programmers, testers, and line managers would keep successfully pushing agile methods into the mainstream.

Where the agile methods are not established is among the ranks of the CIOs, CFOs, CEOs. That's sad, because one of the things that first struck me about the agile methods was the fervor with which rank techies seemed to care about things like ROI and making the "gold owners" truly happy. That was certainly something I'd not seen before.

And yet the CxOs don't know that. The message they hear from the heavyweight competitors to the agile methods is that agile projects are havens for mad hackers who can't be trusted. Or they hear the message that no kind of development really works, so you might as well get the inevitable dissatisfaction for 1/5 the salary cost by going offshore.

So the technical communities are not being left to themselves. We have to counter those messages. The agile methods need better PR directed at the executive suite.

I'm the chair of a committee within the Agile Alliance board. Within a month, we are to deliver a proposed approach and budget for better PR. If you have ideas to suggest, please send them to me. Lord knows that speaking to CxOs is not my strong suit.

## Posted at 11:25 in category /agile


Tue, 21 Oct 2003

I downloaded a copy of IntelliJ IDEA a few days before Ward Cunningham and I were scheduled to do a presentation on test-first programming at PNSQC. I must say that it's the only IDE that hasn't made me run screaming back to Emacs.

It seems, however, kind of flaky on the Mac. The first time I launched it, it wouldn't let me dismiss one of the floating windows, but it's worked fine all later times. It's crashed a few times, but without losing anything. And, literally just before our presentation, we wanted to enlarge the font. I'm still not quite sure how we did it, but we changed the font instead. The result:

Which menu do you think has the command that takes you to a declaration? And when we pulled down a menu, we got something with this information content: ðððð

Fortunately, we had presence of mind, Emacs, and we remembered that the little green arrow on the right meant "run the FIT tests". So we edited code in Emacs, ran tests with IDEA, and a good time was had by all. And we later had Andy Tinkham to help Ward get the JUnit tests working in Eclipse while I pontificated to distract the audience.

Not the most auspicious day for IDEA, but I'd still use it for Java coding if I could get some employer to buy it for me. At the current price, with the Mac flakiness, it doesn't quite cut it for this independent consultant.

(It was not too hard to figure out how to correct the problem - just not in the heat of the moment, with maybe 100 people watching. So I have a usable copy for the next few days.)

(We were also using Word to write FIT tables. Word crashed during the presentation. Moreover, we stripped off all Word's toolbars to remove clutter. Now, when I get home, I discover that I cannot add back the Drawing toolbar. When I do, Word just hangs. I guess I have nothing better to do than reinstall.)

(Seems harsh to be more snarkish to Microsoft than to JetBrains, makers of IDEA. But I bet JetBrains would trade some goodwill for monopoly positioning and a huge cash hoard, some of which could be usefully spent on Word for the Macintosh, which is - on my machine - markedly buggier than the Windows version. Please do better, Redmond guys.)

## Posted at 09:13 in category /misc


Mon, 06 Oct 2003

If you subscribed to this blog's RSS feed but never got any updates, it's because I used a bad relative link. It caused you to only get updates to category "blog". This is the first one ever. Change your subscription to this: http://www.exampler.com/old-blog/index.rss

If you have been getting updates, don't change anything. Sorry.

## Posted at 19:59 in category /blog


Sun, 05 Oct 2003

Agile testing directions: Postscript

The last part (hurray!) of a series

Thus ends my essay on where agile testing is going and should go. I want to reemphasize that I fully expect I'll look back on it in five years and think "How naïve". That's always been the case in the past. Why should the future be different? I like being wrong, as long as the wrongness is a step along a productive path. I feel that way about this essay. I feel good about the direction my work will now take me. I hope this flood of words is also useful to others.

## Posted at 09:19 in category /agile


Via Keith Ray, something from Jim Little about continuous learning. A practical approach that I've not seen before.

## Posted at 09:19 in category /misc


Sat, 04 Oct 2003

Agile testing directions: Testers on agile projects

Part 7 of a series

Should there be testers on agile projects?

First: what's the alternative? It is to have non-specialists (programmers, business experts, technical writers, etc.) perform the activities I've identified in this series: helping to create guiding examples and producing product critiques. Or, symmetrically, it's to have testers who do programming, business analysis, technical writing, etc. It's to consider "testing" as only one set of skills that needs to be available, in sufficient quantity, somewhere in the team, to service all the tasks that require those skills.

Why would non-specialists be a bad idea? Here are some possible reasons:

Argument

Let me address minimum required skills and comparative advantage first. These arguments seem to me strongest in the case of technology-facing product critiques like security testing or usability testing. On a substantial project, I can certainly see the ongoing presence of a specialist security tester. On smaller projects, I can see the occasional presence of a specialist security tester. (The project could probably not justify continual presence.)

As for the exploratory testers that I'm relying on for business-facing product critiques, I'm not sure. So many of the bugs that exploratory testers (and most other testers) find are ones that programmers could prevent if they properly internalized the frequent experience of seeing those bugs. (Exploratory testers - all testers - get good in large part because they pay attention to patterns in the bugs they see.) A good way to internalize bugs is to involve the programmers in not just fixing but also in finding them. And there'll be fewer of the bugs around if the testers are writing some of the code. So this argues against specialist testers.

Put it another way: I don't think that there's any reason most people cannot have the minimum required exploratory testing skills. And the argument from comparative advantage doesn't apply if mowing your lawn is good basketball practice.

That doesn't say that there won't be specialist exploratory testers who get a team up to speed and sometimes visit for check-ups and to teach new skills. It'd be no different from hiring Bill Wake to do that for refactoring skills, or Esther Derby to do that for retrospectives. But those people aren't "on the team".

I think the same reasoning applies to the left side of the matrix - technology-facing checked examples (unit tests) and business-facing checked examples (customer tests). I teach this stuff to testers. Programmers can do it. Business experts can do it, though few probably have the opportunity to reach the minimum skill level.
But that's why business-facing examples are created by a team, not tossed over the wall to one. In fact, team communication is so important that it ought to swamp any of the effects of comparative advantage. (After all, comparative advantage applies just as well to programming skills, and agile projects already make a bet that the comparative advantage of having GUI experts who do only GUIs and database experts who do only databases isn't sufficient.) Now let's look at innate aptitude. When Jeff Patton showed a group of us an example of usage-centered design, one of the exercises was to create roles for a hypothetical conference paper review system. I was the one who created roles like "reluctant paper reviewer", "overworked conference chair", and "procrastinating author". Someone remarked, "You can tell Brian's a tester". We all had a good chuckle at the way I gravitated to the pessimistic cases. But the thing is - that's learned behavior. I did it because I was consciously looking for people who would treat the system differently than developers would likely hope (and because I have experience with such systems in all those roles). My hunch is that I'm by nature no more naturally critical than average, but I've learned to become an adequate tester. I think the average programmer can, as well. Certainly the programmers I've met haven't been notable for being panglossian, for thinking other people's software is the best in this best of all possible worlds. But it's true an attack dog mentality usually applies to other people's software. It's your own that provokes the conflict of emotional interest. I once had Elisabeth Hendrickson doing some exploratory testing on an app of mine. I was feeling pretty cocky going in - I was sure my technology-facing and business-facing examples were thorough. Of course, she quickly found a serious bug. Not only was I shocked, I also reacted in a defensive way that's familiar to testers. 
(Not harmfully, I don't think, because we were both aware of it and talked about it.) And I've later done some exploratory testing of part of the app while under a deadline, realized that I'd done a weak coding job on an "unimportant" part of the user interface, then felt reluctant to push the GUI hard because I really didn't want to have to fix bugs right then. So this is a real problem. I have hopes that we can reduce it with practices. For example, just as pair programming tends to keep people honest about doing their refactoring, it can help keep people honest about pushing the code hard in exploratory testing. Reluctance to refactor under schedule pressure - leading to accumulating design debt - isn't a problem that will ever go away, but teams have to learn to cope. Perhaps the same is true of emotional conflict of interest. Related to emotional conflict of interest is the problem of useful ignorance. Imagine it's iteration five. A combined tester/programmer/whatever has been working with the product from the beginning. When exploring it, she's developed habits. If there are two ways to do something, she always chooses one. When she uses the product, she doesn't make many conceptual mistakes, because she knows how the product's supposed to work. Her team's been writing lots of guiding examples - and as they do that, they've been building implicit models of what their "ideal user" is like, and they have increasing trouble imagining other kinds of users. This is a tough one to get around. Role playing can help. Elisabeth Hendrickson teaches testers to (sometimes) assume extreme personae when testing. What would happen if Bugs Bunny used the product? He's a devious troublemaker, always probing for weakness, always flouting authority. How about Charlie Chaplin in Modern Times: naïve, unprepared, pressured to work ever faster? Another technique that might help is Hans Buwalda's soap opera testing. 
It's my hope that such techniques will help, especially when combined with pairing (where each person drives her partner to fits of creativity) in a bullpen setting (where the resulting party atmosphere will spur people on). But I can't help but think that artificial ignorance is no substitute for the real thing.

Staffing

So. Should there be testers on an agile project? Well, it depends. But here's what I would like to see, were I responsible for staffing a really good agile team working on an important product. Think of this as my default approach, the prejudice I would bring to a situation.

Are there testers on this team, once it jells? Who cares? - there will be good testing, even though it will be increasingly hard to point at any activity and say, "That. That there. That's testing and nothing but."

Disclaimers

## Posted at 12:20 in category /agile


Thu, 02 Oct 2003

Master of Fine Arts in Software

Richard Gabriel has been pushing the idea of a Master of Fine Arts in Software for some time. It now looks as if the University of Illinois is seriously considering the idea of offering such a degree (which they would prefer to call "Master of Software Arts"). There is likely to be a trial run in early January. If that goes well, the next step is a full-fledged University program.

A software MFA would be patterned after a non-residential MFA. Twice a year, students would come to campus for about ten days. They'd do a lot of work. They'd get a professor with whom they'd work closely-but-remotely for the next six months. Repeat several times.

An MFA will differ from conventional software studies in several ways:

Gabriel says: The way that the program works is for each student to spend a lot of time explicitly thinking about the craft elements in any piece of software, design, or user interface... It is this explicit attention to matters of craft that matures each student into an excellent software practitioner.

Ralph Johnson and I are the local organizers, and also two of the instructors. We're looking for students. This first trial run, we're especially looking for people with lots of experience and reputation. If those people get value, everyone can. And if they say they got value, the future program will get lots of students. Who wouldn't want to attend a program that people like Ward Cunningham or Dave Thomas or Andy Hunt or Martin Fowler or Mike Clark or Michael Feathers or Eric Evans or Paul Graham said was worth their time?

I've set up a Yahoo Groups list called software-mfa. You can subscribe by sending mail to software-mfa-subscribe@yahoogroups.com.

As you can see, my efforts to become a hermit and not get involved in organizing things are proceeding well. But this is a chance to make an important difference.

## Posted at 08:35 in category /misc


Wed, 01 Oct 2003

At Ralph Johnson's suggestion, I wrote a position paper for a National Science Foundation workshop on the science of design. ("The workshop objective is to help the NSF better define the field and its major open problems and to prioritize important research issues.") On the principle that no good deed should go unblogged, here's a PDF copy of my position paper, "A Social Science of Design".

My position is that one Science of Design should be a science of people doing design... more akin to anthropology or social studies of scientific practice than to physics... A successful research program would win the Jolt software productivity award as well as help someone gain tenure.

The last time I was at a "how should government fund software research" workshop, I had absolutely no effect with a similarly iconoclastic and populist proposal. If I'm accepted this time, we'll see if my presentation and argumentation skills have improved in the last two decades. (They can't have gotten worse.)

## Posted at 08:13 in category /misc


Sat, 27 Sep 2003

Champaign-Urbana Agile study group

Some of us have been talking about creating an Agile methods study group for the C-U area. We want to get started. To that end, I'll be giving a preview performance of a talk on agile testing that I'll later give at the Pacific Northwest Software Quality Conference. It's titled "A Year in Agile Testing", but it might be better titled "Where I Think Testing in Agile Projects is Going". Readers of my "agile testing directions" series will know what to expect. (Scroll down from here for an abstract.)

The talk is sized to fit a keynote slot, so it's about 1.5 hours long (including question time). Afterwards, we'll take off to a restaurant and set up the next study group session.

When: Thursday, October 2, 5:30 PM

Motorola wants to know roughly how many people are coming, so let me know if you are. (Yes, I know I was going to become a hermit.)

## Posted at 17:33 in category /agile


Thu, 25 Sep 2003

Agile testing directions: technology-facing product critiques

Part 6 of a series As an aid to conversation and thought, I've been breaking one topic, "testing in agile projects," into four distinct topics. Today I'm finishing the right side of the matrix with product critiques that face technology more than the business. I've described exploratory testing as my tool of choice for business-facing product critiques. But while it may find security problems, performance problems, bugs that normally occur under load, usability problems (like suitability for color-blind people), and the like, I wouldn't count on it. Moreover, these "ilities" or non-functional or para-functional requirements tend to be hard to specify with examples. So it seems that preventing or finding these bugs has been left out of the story so far. Fortunately, there's one quadrant of the matrix left. (How convenient...) The key thing, I think, about such non-functional bugs is that finding them is a highly technical matter. You don't just casually pick up security knowledge. Performance testing is a black art. Usability isn't a technical subject in the sense of "requiring you to know a lot about computers", but it does require you to know a lot about people. (Mark Pilgrim's Dive Into Accessibility is a quick and pleasant introduction that hints at how much richness there is.) Despite what I often say about agile projects favoring generalists, these are areas where it seems to me they need specialists. If security is important to your project, it would be good to have visits from security experts, people who have experience with security in many domains. (That is, security knowledge is more important than domain knowledge.) These people can teach the team how to build in security as well as test whether it has in fact been built in. (It's interesting: my impression is that these fields have less of a separation between the "design" and "critique" roles than does straight functional product development. 
It seems that Jakob Nielsen writes about both usability design and usability testing. The same seems true of security people like Gary McGraw and maybe Bruce Schneier, although James Whittaker seems to concentrate on testing. I wonder if my impression is valid? It seems less true of performance testers, though the really hot-shot performance testers I know seem to me perfectly capable of designing high-performance systems.) So it seems to me that Agility brings nothing new to the party. These specialties exist, they're developed to varying degrees, they deserve further development, and their specialists have things well in hand. It may be a failure of imagination, but I think they should continue on as they are.

It seems I've finished my series about future directions for Agile testing. But there remains one last installment: do I think, in the end, that there should be testers in Agile projects? It's a hot issue, so I should address it.

## Posted at 16:06 in category /agile


Wed, 24 Sep 2003

Agile testing directions: business-facing product critiques

Part 5 of a series

As an aid to conversation and thought, I've been breaking one topic, "testing in agile projects," into four distinct topics. Today I'm starting to write about the right side of the matrix: product critiques.

Using business-facing examples to design products is all well and good, but what about when the examples are wrong? For wrong some surely will be. The business expert will forget some things that real users will need. Or the business expert will express needs wrongly, so that programmers faithfully implement the wrong thing.

Those wrongnesses, when remembered or noticed, might be considered bugs, or might be considered feature requests. The boundary between the two has always been fuzzy. I'll just call them 'issues'.

How are issues brought to the team's attention?

These feedback loops are tighter than in conventional projects because agile projects like short iterations. But they're not ideal. The business experts may well be too close to the project to see it with fresh and unbiased eyes. Users often do not report problems with the software they get. When they do, the reports are inexpert and hard to act upon. And the feedback loop is still less frequent than an agile project would like. People who want instant feedback on a one-line code change will be disappointed waiting three months to hear from users. For that reason, it seems useful to have some additional form of product critique - one that notices what the users would, only sooner. The critiquers have a resource that the people creating before-the-fact examples do not: a new iteration of the actual working software. When you're describing something that doesn't exist yet, you're mentally manipulating an abstraction, an artifact of your imagination. Getting your hands on the product activates a different type of perception and judgment. You notice things when test driving a car that you do not notice when poring over its specs. Manipulation is different than cogitation. So it seems to me that business-facing product critiques should be heavy on manipulation, on trying to approach the actual experience of different types of users. That seems to me a domain of exploratory testing in the style of James Bach, Cem Kaner, Elisabeth Hendrickson, and others. (I have collected some links on exploratory testing, but the best expositions can be found among James Bach's articles.) Going forward, I can see us trying out at least five kinds of exploratory testing:

For each of these, we should explore the question of when the tester should be someone from outside the team, someone who swoops in on the product to test it. That has the advantage that the swooping tester is more free of bias and preconceptions, but the disadvantage that she is likely to spend much time learning the basics. That will skew the type of issues found. When I first started talking about exploratory testing on agile projects, over a year ago, I had the notion that it would involve both finding bugs and also revealing bold new ideas for the product. One session would find both kinds of issues. For a time, I called it "exploratory learning" to emphasize this expanded role. I've since tentatively concluded that the two goals don't go together well. Finding bugs is just too seductive - thinking about feature ideas gets lost in the flow of exploratory testing. Some happens, but not enough. So I'm thinking there needs to be a separate feature brainstorming activity. I have no particularly good ideas now about how to do that. "More research is needed."

## Posted at 14:01 in category /agile


Tue, 23 Sep 2003

I don't pair program much. I'm an independent consultant, I live at least 500 miles (about 800 kilometers) from almost all my clients, and I can't be on-site for more than a quarter of the time. (This was easier to pull off during the Bubble.) So it's hard to get the opportunity to pair.

Most usually when I pair, one or the other of us knows the program very well. But once, when I was pairing with Jeremy Stell-Smith, neither of us knew the program that well. And I got an interesting feeling: I didn't feel confident that I really had a solid handle on the change we were making, and I didn't feel confident that Jeremy did either, but I did feel confident - or more confident - that the combination of Jeremy, me, and the tests did. It was a weird and somewhat unsettling feeling.

That reminds me now of something Ken Schwaber said in Scrum Master training - that one of the hardest things for a Scrum Master to do is to sit back, wait, and trust that the team can solve the problem. It's trust not in a single person, but in a system composed of people, techniques, and rules.

All this came to mind when I read a speech by Brian Eno describing what he calls "generative music". I don't think it's too facile to say that his composition style is to conventional composition as agile methods are to conventional software development. (Well, maybe it is facile, but perhaps good ideas can result from the comparison.) They both involve setting up a system, letting it rip, observing the results without attempting to control the process, and tweaking in response. There is, again, a loss of control that I like intellectually but still sometimes find unsettling.

Here's that other Brian:

Sounds cool, right? But then there's this, where he demos a composition. Remember, he only puts in rules and starting conditions, then lets the thing generate on its own:

You, dear reader, may not have ever done a live demo. But if you have, I bet Eno's experience hits home: "Observe this!... um, it usually works... (Gut clenches)" Surely agile projects run into this problem at a slower scale: "We're going to self-organize, be generative, we're a complex adaptive system, just watch... um, it usually works. (Gut clenches)" Agile development involves bets. (The XP slogan "You aren't going to need it" should really be stated "On average, you'll need it seldom enough that the best bet is that you won't need it".) Sometimes the bet doesn't pay off. I believe that, over the course of most decent-sized projects, it will. But surely there will be single iterations that collapse into silence. I don't think enough is said about how to cope with that.

## Posted at 20:14 in category /agile


Sat, 20 Sep 2003

The philosopher Ian Hacking on definitions, in a section called "Don't First Define, Ask for the Point" in his The Social Construction of What? (pp. 5-6):

... Take 'exploitation'. In a recent book about it, Alan Wertheimer does a splendid job of seeking out necessary and sufficient conditions for the truth of statements of the form 'A exploits B'. He does not quite succeed, because the point of saying that middle-class couples exploit surrogate mothers, or that colleges exploit their basketball stars on scholarships... is to raise consciousness. The point is less to describe the relation between colleges and stars than to change how we see those relations. This relies not on necessary and sufficient conditions for claims about exploitation, but on fruitful analogies and new perspectives.

In that light, consider definitions of "agile methods", "agile testing", "exploratory testing", "testability", and the like: what's the point of making the definition? What change is the maker trying to encourage?

The Poppendiecks on the construction analogy and lean construction (via Chris Morris):

Malcolm Nicolson and Cathleen McLaughlin, in "Social constructionism and medical sociology: a study of the vascular theory of multiple sclerosis" (Sociology of Health and Illness, Vol. 10 No. 3, 1988, p. 257 [footnote 15]): In order for technical knowledge to [be given credit] it has to be able to move people as well as things. Laurent Bossavit on models: But just because diagrams and models have abstraction in common isn't enough to call diagrams models.

## Posted at 09:58 in category /misc


Mon, 15 Sep 2003

Christian Sepulveda on "What qualifies as an agile process?": I feel these guidelines offer a different perspective than the elements of the manifesto. For example, communication and collaboration are desirable because they promote discovery and provide feedback. As I consider the experiences I would characterize as "agile", I am better able to articulate their "agility" in terms of these guidelines.

Martin Fowler on application boundaries: I don't think applications are going away for the same reasons why application boundaries are so hard to draw. Essentially applications are social constructions.

Michael Hamman has comments on my earlier post about "the reader in the code". His description of how musicians make commentaries on musical scores is fascinating. I want to see it sometime, mine it for ideas.

Michael also elaborates on his earlier post on breakdowns. I'll have to ponder that for a while.

Finally, amidst today's posturing and self-righteous certainty - the conversion of real events into mere fodder for argument - a reminder of the reflexive unity of people in the moment.

## Posted at 10:57 in category /misc


Sat, 13 Sep 2003

This year's Pattern Languages of Programs conference is over. (I was program chair.) Thanks to everyone who attended and made it work. For me, the highlight of the conference was when Jeff Patton led some of us through a two hour example of his variant of Constantine/Lockwood style usage-centered design. I also liked Linda Rising's demonstration of a Norm Kerth style retrospective (applied to PLoP itself). At Richard Gabriel's suggestion, we brought in Linda Elkin, an experienced teacher of poets. She and Richard (also a poet, as well as Distinguished Engineer at Sun) taught us a great deal about clarity in writing. Finally, Bob Hanmer and Cameron Smith stepped up to keep the games tradition of PLoP alive. As you can see, PLoP is no ordinary conference.

Thus ends my summer of altruistically organizing (or under-organizing) people. I need a break. So I'm going to do what I do when I need a break: write code. I'll be spending half my non-travelling time building a new app. Since I'm now an expert on usage-centered design (that's a, I say, that's a joke, son), I'm going to start with that.

But wait... we've been talking about starting a local Agile study group, and I think that's really important, and I bet it wouldn't be that much work...

P.S. The postings on agile testing directions will continue. Just don't expect me to organize a conference on the topic.

## Posted at 09:23 in category /misc


Fri, 05 Sep 2003

The Marquis de Sade and project planning

I dabble in science studies (a difficult field to define, so I won't try) partly because it causes me to read weird stuff. Last year, I read "Sade, the Mechanization of the Libertine Body, and the Crisis of Reason", by Marcel Henaff [1]. Here's a quote about Sade's obsession with numerical descriptions of body parts and orgies:

It is as if excessive precision was supposed to compensate for the rather obvious lack of verisimilitude of the narrated actions.

The same could be said of most project plans. Affix this quote to the nearest Pert chart.

[1] In Technology and the Politics of Knowledge, Feenberg & Hannay eds., 1995.

## Posted at 14:32 in category /misc


Agile testing directions: business-facing team support

Part 4 of a series

As an aid to conversation and thought, I've been breaking one topic, "testing in agile projects," into four distinct topics. Today I'm writing about how we can use business-facing examples to support the work of the whole team (not just the programmers) [1].

I look to project examples for three things: provoking the programmers to write the right code, improving conversations between the technology experts and the business experts, and helping the business experts more quickly realize the possibilities inherent in the product. Let me take them in turn.

One of my two (maybe three) focuses next year will be these business-facing examples. I've allocated US$15K for visits to shops who use them well. If you know of such a shop, please contact me. After these visits (and after paid consulting visits and after practicing on my own), I want to be able to tell stories:

Only when we have a collection of such stories will the practice of using business-facing examples be as well understood, be as routine, as is the practice of technology-facing examples (aka test-driven design).

[1] I originally called this quadrant "business-facing programmer support". It now seems to me that the scope is wider - the whole team - so I changed the name.

[2] I confess I've only read parts of Eric's book, in manuscript. The final copy is in my queue. I think I've got his message right, though.

## Posted at 14:04 in category /agile


Fri, 29 Aug 2003

Christian Sepulveda writes about comments in code. Not all comments are bad. But they are generally deodorant; they cover up mistakes in the code. Each time a comment is written to explain what the code is doing, the code should be re-written to be more clean and self explanatory.

That reminded me of the last time someone mentioned to me that some code needed comments. That was when that someone and I were looking at Ward Cunningham's FIT code. The code made sense to me, but it didn't to them. You could say that's just because I've seen a lot more code, but I think that's not saying enough. My experience makes me a particular kind of code reader, one who's primed to get some of the idioms and ideas Ward used. I knew how to read between the lines.

Let me expand on that with a different example. Here's some C code:

    int fact(int n) { // caller must ensure n >= 0
        int result = 1;
        for (int i = 2; i <= n; i++)
            result *= i;
        return result;
    }

I think a C programmer would find that an unsurprising and unobjectionable implementation. Suppose now that I transliterate it into Lisp:

    (defun fact (n) ; caller must ensure (>= n 0)
      (let ((result 1))
        (do ((i 2 (+ i 1)))
            ((> i n))
          (setq result (* result i)))
        result))

This code, I claim, would have a different meaning to a Lisp programmer. When reading it, questions would flood her mind. Why isn't the obvious recursive form used? Is it for efficiency? If so, why aren't the types of the variables declared? Am I looking at this because a C programmer wrote the code, someone who doesn't "get" Lisp?
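For readers who don't read Lisp, the "obvious recursive form" the Lisp reader is looking for can be shown next to the loop. The block below is a minimal sketch in C, written only so the two shapes can be compared in one language; the names `fact_iterative` and `fact_recursive` are made up for this illustration, and the `assert` calls stand in for the "caller must ensure" comment.

```c
#include <assert.h>

/* The loop from the C example: multiply 2..n into a running result. */
int fact_iterative(int n) {
    assert(n >= 0);                  /* caller must ensure n >= 0 */
    int result = 1;
    for (int i = 2; i <= n; i++)
        result *= i;
    return result;
}

/* The recursive form: n! = n * (n-1)!, with 0! = 1! = 1. */
int fact_recursive(int n) {
    assert(n >= 0);                  /* caller must ensure n >= 0 */
    if (n <= 1)
        return 1;                    /* base case of the recursion */
    return n * fact_recursive(n - 1);
}
```

Both functions compute the same values for small non-negative `n` (with a plain `int`, results overflow past 12! on typical 32-bit ints). The difference that matters here is what each shape signals to its expected reader, not what it computes.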

A Lisp programmer who cared about efficiency would likely use an optional argument to pass along intermediate results. That would look something like this:

    (defun fact (n &optional (result-so-far 1)) ; caller must ensure (>= n 0)
      (if (<= n 1)
          result-so-far
          (fact (- n 1)
                (* result-so-far n))))

(I left out variable declarations.) Unless my 18-year-old memories of reading Lisp do me wrong, I'd read that function like this:

    (defun fact (n &optional (result-so-far 1))

"OK, looks like an accumulator argument. This is probably going to be recursive..."

    (if (<= n 1) result-so-far

"Uh-huh. Base case of the recursion."

    (fact (- n 1)

"OK. Tail-recursive. So she wants the compiler to turn the recursion into a loop. Either speed is important, or stack depth is important, or she's being gratuitously clever. Let's read on." With that as preface, let me both agree and disagree with Christian. I do believe that code with comments should often be written to be more self-explanatory. But code can only ever be self-explanatory with respect to an expected reader. Now, that in itself is kind of boringly obvious. What's obvious to you mightn't be obvious to me if we've had different experiences. And the obvious consequences aren't that exciting either: The more diverse your audience, the more likely you'll need comments. Teams will naturally converge on a particular "canonical reader", but perhaps that process could be accelerated if people were mindful of it. We could do more with the idea. The line by line analysis I gave above was inspired by the literary critic Stanley Fish. He has a style of criticism called "affective stylistics". In it, you read something (typically a poem) word by word, asking what effect each word (and punctuation mark, and line break...) will have on the canonical reader's evolving interpretation of the poem. To Fish, the meaning of the poem is that evolution. I don't buy this style of criticism, not as a total solution, but it's awfully entertaining and I have this notion that people practiced in it might notice interesting things about code. Affective stylistics is part of a whole branch of literary criticism (for all I know, horribly dated now) called "reader-response criticism". There are many different approaches under that umbrella. I've wanted for a few years to study it seriously, apply it to code, and see what happened. But, really, it seems unlikely I'll ever get the time. If there's any graduate student out there who, like me at one time, has one foot in the English department and one in the Computer Science department, maybe you'll give it a try. 
(Good luck with your advisor...) And maybe this is something that could fit under the auspices of Dick Gabriel's Master of Fine Arts in Software, if that ever gets established. Recommended reading:

## Posted at 15:31 in category /misc


Thu, 28 Aug 2003

Michael Feathers on "Stunting a framework": The next time you are tempted to write and distribute a framework, run a little experiment. Imagine the smallest useful set of classes you can create. Not a framework, just a small seed, a seedwork. Design it so that it is easy to refactor. Code it and then stop. Can you explain it to someone in an hour? Good. Now, can you let it go? Can you really let it go?

Christian Sepulveda on "Testers and XP: Maybe we are asking the wrong question": ... there are other agile practices that address these other concerns and work in harmony with XP. Scrum is the best example. Scrum is about project management, not coding. When I am asked about the role of project managers in XP, I suggest Scrum.

I like Christian's idea of finding a style of testing that's agile-programmer-compatible in the way that Scrum is a style of management that's agile-programmer-compatible. It feels like a different way of looking at the issue, and I like that feeling.

Michael Hamman talks of Heidegger and Winograd&Flores in "Breakdown, not a problem": Because sometimes our flow needs to be broken - we need to be awoken from our "circumspective" slumber. This notion underlies many of the great tragedies, both in literature and in real life. We are going along in life when something unexpected, perhaps even terrible, occurs. Our whole life is thrown into relief - all of the things, the people, and qualities that we once took for granted suddenly become meaningful and important to us. Our very conscious attitude toward life shifts dramatically.

Something to think about: what sort of breakdowns would improve your work?

I'm wondering - help me out, Michael - how to fit my talk of test maintenance (within this post) into the terminology of Heidegger and Winograd&Flores. The typical response to an unexpectedly broken test is to fix it in place to match the new behavior. Can I call that circumspective?
I prefer a different response, one that makes distinctions: is this one of those tests that should be fixed in place, or is it one that should be moved, or one that should be deleted? Is that attending to a breakdown? And should we expect that a habit of attending to that kind of breakdown would lead to (first) an explicit and (over time) a tacit team understanding of why you do things with tests? And does that mean that handling broken tests would turn back into the fast "flow" of circumspective behavior? Cem Kaner's "Software customer's bill of rights" Greg Vaughn comments on my unit testing essay. Terse and clear: a better intro to the idea than I've managed to write. (Note how he puts an example - a story - front and center. That's good writing technique.)

## Posted at 09:39 in category /misc


Wed, 27 Aug 2003

Agile testing directions: technology-facing programmer support

Part 3 of a series

As an aid to conversation and thought, I've been breaking one topic, "testing in agile projects," into four distinct topics. Today I'm writing about how we can use technology-facing examples to support programming.

One thing that fits here is test-driven development, as covered in Kent Beck's book of the same name, David Astels's more recent book, and forthcoming books by Phlip, J.B. Rainsberger, and who knows who else. I think that test-driven development (what I would now call example-driven development) is on solid ground. It's not a mainstream technique, but it seems to be progressing nicely toward that. To use Geoffrey Moore's term, I think it's well on its way to crossing the chasm.

(Note: in this posting, when I talk of examples, I mean examples of how coders will use the thing-under-development. In XP terms, unit tests. In my terms, technology-facing examples.)

Put another way, example-driven development has moved from being what Thomas Kuhn called "revolutionary science" to what he called "normal science". In a normal science, people expand the range of applicability of a particular approach. So we now have people applying EDD (sic) to GUIs, figuring out how it works with legacy code, discussing good ways to use mock objects, having long discussions about techniques for handling private methods, and so forth.

Normal science is not the romantic side of science; it's merely where ideas turn into impact on the world. So I'm glad to see we're there with EDD. But normality also means that my ideas for what I want to work on or see others work on... well, they're not very momentous.

I said above that test-driven development is "one thing that fits" today's topic. What else fits? I don't know. And is EDD the best fit? (Might there be a revolution in the offing?) I don't know that either - I'll rely on iconoclasts to figure that out. I'm very interested in listening to them.

## Posted at 15:22 in category /agile

[permalink]

[top]

Sat, 23 Aug 2003

Jim Weirich writes on stripping out the text from a program, leaving only the "line noise" (punctuation). He notes:

What I find interesting is the amount of semantic information that still comes through the "line noise". For example, the "#<>" sequence in the C++ code is obviously an include statement for something in the standard library and the "<<" are output statements using "cout".

That reminds me of something that Ward Cunningham described. He called them "signature surveys". It's a really sweet idea, even more impressive when you see him demo it.
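The stripping described above is easy to try. What follows is only a minimal sketch in Python, not Jim's script and not Ward's signature-survey tool: the function name `line_noise` and the sample C++ snippet are invented for illustration, and "punctuation" is assumed to mean whatever Python's `string.punctuation` contains (which includes the underscore, so identifiers with underscores leave a trace).

```python
import string


def line_noise(source: str) -> str:
    """Keep only punctuation characters, one output line per input line.

    Assumption: "punctuation" means the ASCII set in string.punctuation.
    """
    return "\n".join(
        "".join(ch for ch in line if ch in string.punctuation)
        for line in source.splitlines()
    )


# A made-up C++ fragment, used only to have something to strip.
cpp = (
    "#include <iostream>\n"
    "int main() {\n"
    '    std::cout << "hi" << std::endl;\n'
    "}\n"
)

noise = line_noise(cpp)
print(noise)
```

On this sample the first line collapses to `#<>`, the include signature mentioned in the quote, and the output statement keeps its `<<` pairs.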

## Posted at 11:23 in category /misc

[permalink]

[top]

Fri, 22 Aug 2003

Agile testing directions: tests and examples

Part 2 of a series

'It all depends on what you mean by home.'

In my first posting, I drew this matrix:

Consider the left to right division. Some testing on agile projects, I say, is done to critique a product; other testing, to support programming. But the meaning and the connotations of the word "testing" differ wildly in the two cases.

When it comes to supporting programming, tests are mainly about preparing and reassuring. You write a test to help you clarify your thinking about a problem. You use it as an illustrative example of the way the code ought to behave. It is, fortunately, an example that actively checks the code, which is reassuring. These tests also find bugs, but that is a secondary purpose.

On the other side of the division, tests are about uncovering prior mistakes and omissions. The primary meaning is about bugs. There are secondary meanings, but that primary meaning is very primary. (Many testers, especially the best ones, have their identities wrapped up in the connotations of those words.)

I want to try an experiment. What if we stopped using the words "testing" and "tests" for what happens in the left side of the matrix? What if we called them "checked examples" instead?

Imagine two XP programmers sitting down to code. They'll start by constructing an incisive example of what the code needs to do next. They'll check that it doesn't do it yet. (If it does, something's surely peculiar.) They'll make the code do it. They'll check that the example is now true, and that all the other examples remain good examples of what the code does. Then they'll move on to an example of the next thing the code should do.

Is there a point to that switch, or is it just a meaningless textual substitution? Well, you do experiments to find these things out. Try using "example" occasionally, often enough that it stops sounding completely weird. Now:

Does it change your perspective at all when you sit down to code?

Does it make a difference to walk up to a customer and ask for an example rather than a test?

Add on some adjectives: what do motivating, telling, or insightful examples look like, and how are they different from powerful tests? ("Powerful" being the typical adjective-of-praise attached to a test.)

Is it easier to see what a tester does on an XP project when everyone else is making examples, when no one else is making tests?

Credit: Ward Cunningham added the adjective "checked". I was originally calling them either "guiding" or "coaching" examples.
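The rhythm of the two XP programmers can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the word-counting task, the `check` helper, and every function name below are invented here and come from no particular project or testing framework.

```python
def check(example, code):
    """Return True if the code behaves the way the example says it should."""
    try:
        example(code)
        return True
    except (AssertionError, NotImplementedError):
        return False


# An earlier example, already a good example of what the code does.
def example_empty_string_has_no_words(count_words):
    assert count_words("") == 0


# The next example: what the code needs to do next.
def example_words_are_separated_by_spaces(count_words):
    assert count_words("agile testing directions") == 3


# Hypothetical code before the pair starts: only the earlier example holds.
def count_words_before(text):
    if text == "":
        return 0
    raise NotImplementedError("nothing here yet for non-empty text")


# Hypothetical code after they "make the code do it".
def count_words_after(text):
    return len(text.split())


examples = [
    example_empty_string_has_no_words,
    example_words_are_separated_by_spaces,
]

# Step 1: check that the code doesn't do the new thing yet.
not_yet = check(example_words_are_separated_by_spaces, count_words_before)

# Step 2: after the change, check the new example and all the other examples.
all_good = all(check(example, count_words_after) for example in examples)

print(not_yet, all_good)
```

Nothing in the sketch depends on calling these functions "examples" rather than "tests"; the renaming changes how the code reads, not how it runs, which is the experiment being proposed.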

## Posted at 14:38 in category /agile

[permalink]

[top]

Thu, 21 Aug 2003

... But I think these arguments, while valid, have missed another vital reason for direct developer-customer interaction - enjoyment...

... they had never before realized that physical space could have such a subtle impact on human behavior...

... An idiom, in natural language, is a ready-made expression with a specific meaning that must be learned, and can't necessarily be deduced from the terms of the expression. This meaning transposes easily to programming languages and to software in general...

...It's a mirror, made of wood...

## Posted at 16:01 in category /misc

[permalink]

[top]

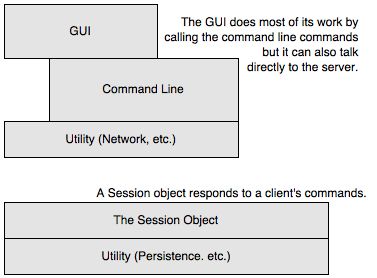

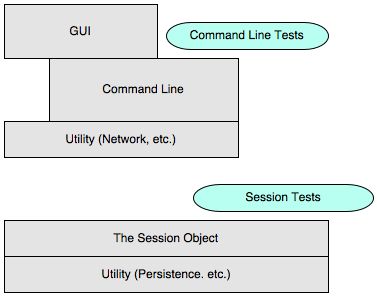

At XP Agile Universe, two people - perhaps more - told me that I'm not doing enough to aid the development of Agile testing as a discipline, as a stable and widely understood bundle of skills. I spend too much time saying I don't know where Agile testing will be in five years, not enough pointing in some direction and saying "But let's see if maybe we can find it over there". They're probably right. So this is the start of a series of notes in which I'll do just that. I'm going to start by restating a pair of distinctions that I think are getting to be fairly common. If you hear someone talking about tests in Agile projects, it's useful to ask if those tests are business facing or technology facing. A business-facing test is one you could describe to a business expert in terms that would (or should) interest her. If you were talking on the phone and wanted to describe what questions the test answers, you would use words drawn from the business domain: "If you withdraw more money than you have in your account, does the system automatically extend you a loan for the difference?"
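The overdraft question can itself be written down in code using only business words. The following is a minimal sketch in Python, assuming the business answer is "yes, lend exactly the difference"; the `Account` class, its fields, and the amounts are hypothetical and stand in for whatever the real system would provide.

```python
class Account:
    """Hypothetical account that covers an overdraft with an automatic loan."""

    def __init__(self, balance):
        self.balance = balance
        self.loan = 0

    def withdraw(self, amount):
        shortfall = amount - self.balance
        if shortfall > 0:
            # Assumed policy: the system lends exactly the difference.
            self.loan += shortfall
            self.balance = 0
        else:
            self.balance -= amount
        return amount


def example_overdraft_becomes_a_loan():
    # "If you withdraw more money than you have in your account..."
    account = Account(balance=100)
    account.withdraw(130)
    # "...does the system automatically extend you a loan for the difference?"
    assert account.balance == 0
    assert account.loan == 30


example_overdraft_becomes_a_loan()
```

The point of the sketch is the vocabulary: account, balance, withdraw, and loan are all terms a business expert could discuss on the phone.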

A technology-facing test is one you describe with words drawn from the

domain of the programmers: "Different browsers

implement Javascript differently, so we test whether our

product works with the most important ones." Or:

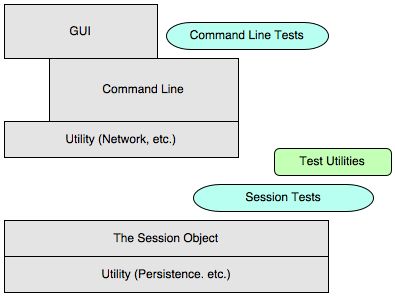

" (These categories have fuzzy boundaries, as so many do. For example, the choice of which browser configurations to test is in part a business decision.) It's also useful to ask people who talk about tests whether they want the tests to support programming or critique the product. By "support programming", I mean that the programmers use them as an integral part of the act of programming. For example, some programmers write a test to tell them what code to write next. By writing that code, they change some of the behavior of the program. Running the test after the change reassures them that they changed what they wanted. Running all the other tests reassures them that they didn't change behavior they intended to leave alone. Tests that critique the product are not focused on the act of programming. Instead, they look at a finished product with the intent of discovering inadequacies. Put those two distinctions together and you get this matrix:

In future postings, I'll talk about each quadrant of the matrix. What's my best guess about how it should evolve?

## Posted at 14:44 in category /agile

[permalink]

[top]

Mon, 04 Aug 2003

Here's something I wrote for the members' newsletter of the Agile Alliance.

When I sent that text to Hans, he had some interesting comments that need to be written down somewhere public. So the above is by way of introduction, and here's what Hans had to say:

P.S.: Ken Schwaber (the newsletter editor) was kind enough to let me publish my blurb here even though the newsletter isn't out yet. Join the Agile Alliance and you can read it again! I quote Hans's email with permission.

## Posted at 14:21 in category /testing

[permalink]

[top]

Jonathan Kohl writes:

P.S. I'm told that Cem Kaner coined the phrase "change detector" at Agile Fusion. The idea has been widespread, but as the patterns people know, a catchy phrase matters a lot. Here we have one, one that I at least don't remember hearing before.

## Posted at 06:17 in category /agile

[permalink]

[top]

Sat, 02 Aug 2003

I've been nominated for a seat on the board of the Agile Alliance. That brings back memories of the time I ran for student council in middle school. (Non-US readers: "middle school" is for the early teenage years.) A nerd is someone who could not possibly be elected to student council in any US school outside the Bronx High School of Science - a true nerd is someone so clueless that he doesn't even realize how hopeless it is to try. Let's just say I didn't get enough votes.

Nevertheless, as I thought about the nomination I was surprised by my reaction. At the end of a summer of Altruistic Organizational Deeds that left me feeling I never want to do anything like that again, I find myself... wanting to do something like that again. The "why do I want to serve" part of the position statement below is heartfelt.

## Posted at 12:58 in category /agile

[permalink]

[top]

Fri, 01 Aug 2003

Putting the object back into OOD

Jim Coplien writes about teaching - and doing - object-oriented design. I don't quite get it, but I think I would like to. A taste:

In the object-oriented analysis course we typified the solution component as the class structure, and the customer problem component as the Use Cases. CRC cards are the place where these two worlds come together--where struggles with the solution offer insight into the problem itself.

I'd like to see an example of this style of design narrated from beginning to end. In the meantime, the article might well be of interest to people who favor prototypes over classes, prefer verbs to nouns, or are suspicious that categories really "carve nature at its joints".

## Posted at 15:12 in category /misc

[permalink]

[top]

Wed, 30 Jul 2003

In early September, 2001, I was embroiled in a mailing list debate about agile methods with someone I'll call X. Here's a note I wrote on September 12, 2001:

One of my tactics in life is to publicly proclaim virtues that I then feel obliged to live up to. Lots of debates about Agility looming ahead - this public posting will force me to treat my debating opponents with charity.

## Posted at 16:28 in category /misc

[permalink]

[top]

Tue, 29 Jul 2003

Evangelizing test-driven development

Mike Clark has a nice posting on evangelizing test-driven development. I can vouch for his style. I taught a "testing for programmers" course from around 1994 to around 1999. I opened the course by telling how, back when I was a programmer, everyone thought I was a better programmer than my talents justified. The reason was that my tests caught so many bugs before I ever checked in. So other people didn't see those bugs. So they thought that I didn't make many.

## Posted at 14:44 in category /agile

[permalink]

[top]

Sat, 26 Jul 2003

Here are some random links that started synapses firing (but to no real effect, yet):

Martin Fowler on multiple canonical models: One of the interesting consequences of a messaging based approach to integration is that there is no longer a need for a single conceptual model...

Martin is speaking of technical models, but I hear an echo of James Bach's diverse half measures (PDF): "use a diversity of methods, because no single heuristic always works." Any model is a heuristic, a bet that it's very often useful to think about a system in a particular way.

Greg Vaughn on a source of resistance to agile methods: Agile development requires a large amount of humility. We have to trust that practices such as TDD (Test Driven Development) might lead to better software than what we could develop purely via our own creative processes. And if it doesn't then the problem might be us rather than the method. To someone whose self-image is largely centered on their own intelligence, this hits mighty close to home and evokes emotional defenses.

Laurent Bossavit on exaptation: In the context of software, an exaptation consists of people finding a valuable use for the software by exploiting some implementation-level behaviour which is entirely accidental and was never anticipated as a requirement. Exaptations are interesting because I think they have to do with more than managing agreements - they're part of the process of discovering requirements as the product is being built.

We have a knack for turning anything we do into an expressive medium. As a beginning driver, I was surprised to find that it was possible to blink a turn light contemptuously, or aggressively... Source code does allow one an infinite range of nuances in a restricted domain of expression: the description of problems we expect a computer to solve for us.

## Posted at 12:26 in category /misc

[permalink]

[top]

Fri, 25 Jul 2003

At Agile Fusion, the team I wasn't on built some "change detectors" for Dave Thomas's weblog. If I understand correctly, they stored snapshots of sample weblogs (the HTML, I suppose). When programmers changed something, they could check what the change detectors detected. (Did what was supposed to change actually change? Did what was supposed to stay the same stay the same?) I can't say more because I didn't really pay any attention to them. Christian Sepulveda has a blog entry that finishes by paying attention to them. He writes: